Embracing AI in Cybersecurity Assessments

Introduction

What separates a resilient organization from a vulnerable one? The ability to see and act on risk faster than attackers do. For years, penetration tests, red team exercises, and compliance audits have been the cornerstone of proactive defense.

These approaches remain essential, but their limitations are becoming harder to ignore. They are labor-intensive, costly, and too slow. By the time an assessment is completed, the threat landscape may have already shifted. This is where AI, used responsibly, becomes a force multiplier.

Why AI Matters in Cybersecurity Assessments

Malicious actors are already a step ahead. Reports from Palo Alto Networks and CrowdStrike confirm that criminal groups and state-sponsored actors are embedding AI directly into their attack playbooks. They’re using generative AI to craft highly convincing phishing lures, agentic AI to automate reconnaissance, and even polymorphic malware that evades traditional detection. If attackers are embracing these tools, defenders can’t afford to remain stuck in old playbooks.

Traditional assessment methods rely on manually combing through logs, mapping networks, and reviewing compliance checklists. While these practices are effective, they are too rigid to keep up with today’s dynamic environment and rapid pace of innovation. A misconfigured cloud bucket can go from exposure to exploitation in hours!

This is where AI delivers its real value proposition. Imagine an assessment approach that continuously monitors your environment, correlates vulnerabilities with real-time threat intelligence, and prioritizes findings based on actual business impact. This is no longer a future vision, it’s becoming a reality.

Practical Use Cases of AI in Cybersecurity Assessments

AI is already proving its value by making cybersecurity assessments more dynamic, scalable, and deeply insightful. Here’s how it’s being applied in practice:

Smarter Threat Modeling

Threat modeling has traditionally relied on workshops, whiteboards, and static diagrams. While valuable, these approaches are limited by human imagination and time. AI can take this process further by analyzing architecture diagrams, configuration files, and historical attack data to suggest likely attack paths. For example, it might flag how a seemingly harmless exposed API, when combined with weak IAM policies, could lead to lateral movement into sensitive systems. This moves threat modeling from theory-driven to evidence-driven, allowing assessments to focus on the attack vectors most likely to be exploited.

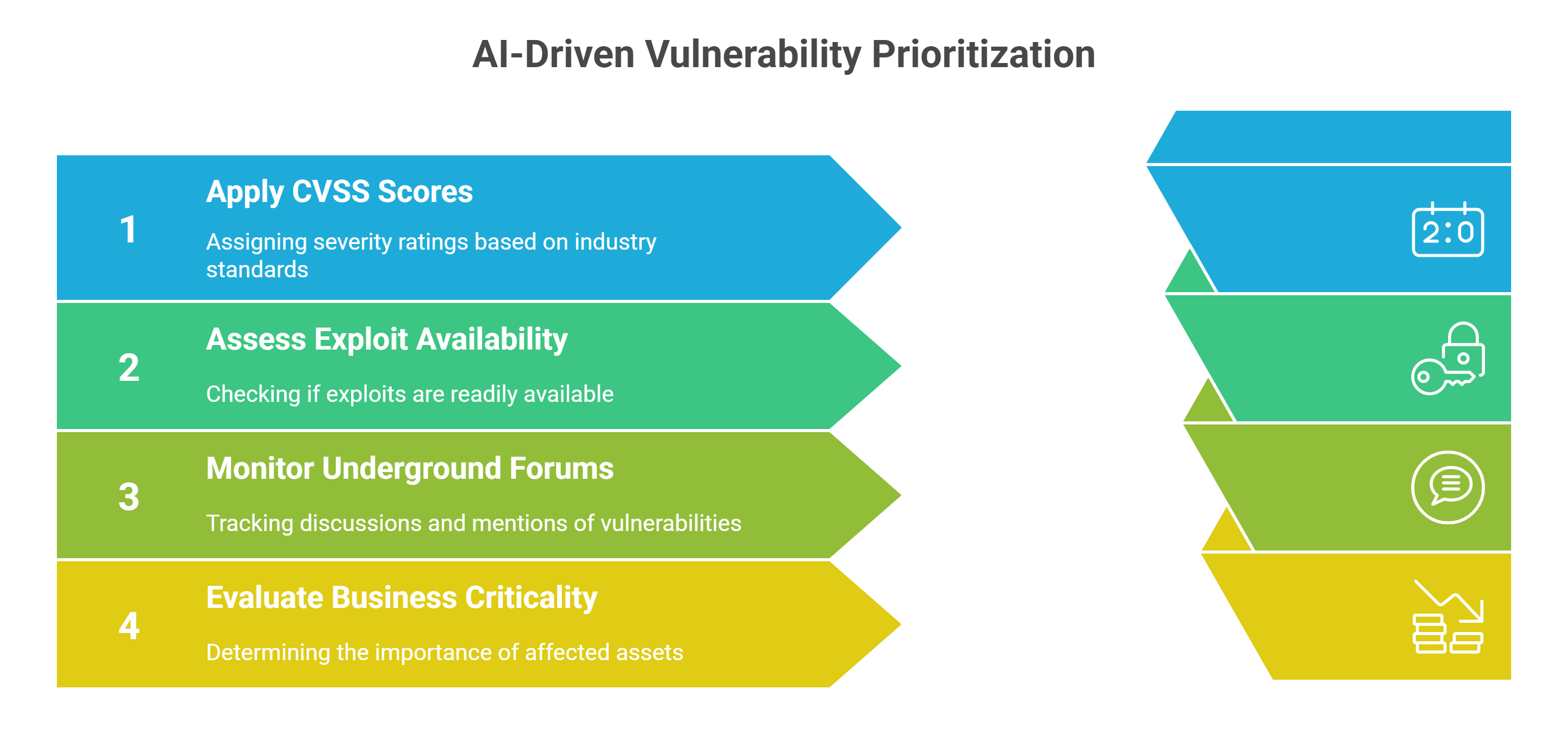

Contextual Vulnerability Prioritization

Vulnerability scans often overwhelm organizations with thousands of findings, many of which are irrelevant. AI can apply contextual intelligence by weighing not just CVSS scores but also exploit availability, chatter on underground forums, and the business criticality of affected assets. A medium-severity flaw in a payroll application, for instance, may be ranked above a critical bug on a test server. This reprioritization ensures that assessments highlight vulnerabilities that matter most, helping teams focus resources where the actual business risk lies.

Red Team Augmentation

Red teamers excel at creativity, but they are often slowed down by repetitive reconnaissance. Agentic AI can automate subdomain enumeration, credential spraying simulations, OSINT scraping and even generate realistic phishing lures. This gives human operators more time to think like adversaries and pursue higher-value attack chains. In practice, an AI-assisted red team assessment might simulate a phishing campaign tailored to a specific organization’s language style, producing scenarios far more convincing than generic templates.

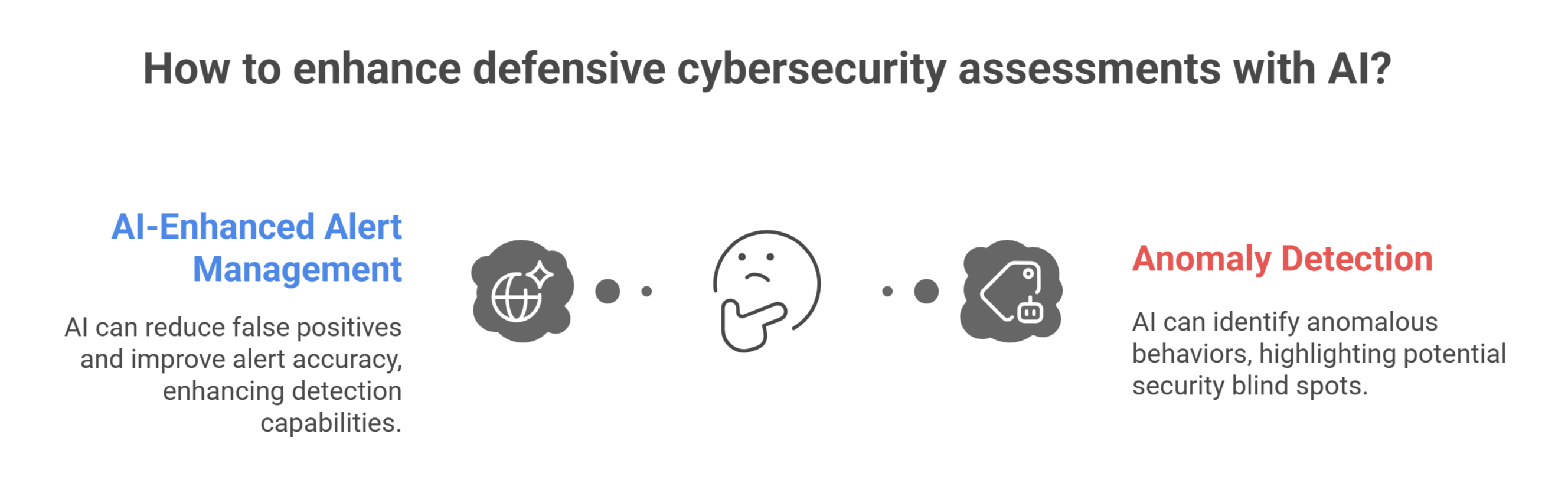

Blue Team and SOC Support

Defensive assessments benefit equally from AI. Traditional SIEMs and log management tools generate an overwhelming volume of alerts, many of which are false positives. AI can learn baseline behaviors such as, normal user logins, system activity, or network traffic and flag anomalies more accurately. During an assessment, this allows blue teams to validate whether their detection and response capabilities are working as intended. For example, if an AI system flags anomalous lateral movement but the SOC’s monitoring doesn’t, the assessment highlights a blind spot that needs addressing.

Risk Communication and Reporting

Assessment findings are only useful if they drive action, but technical reports often fail to resonate with executives or boards. Natural language models can bridge this gap by transforming raw findings into clear, role-specific narratives. An AI-generated summary might explain to a CISO how a vulnerability in cloud storage could impact regulatory compliance, while giving engineers detailed remediation steps. This translation ensures that assessments lead to informed decisions instead of confusion.

Best Practices for Embracing AI in Cybersecurity Assessments

Recognizing the need to embrace AI does not mean ignoring its potential risks. On the contrary, the real lesson is to adopt AI deliberately while putting guardrails in place that minimizes unintended consequences. The goal is not reckless adoption, but responsible integration. With that in mind, several best practices can help organizations navigate this shift effectively:

Start with Targeted Use Cases

The best way to adopt AI in assessments is incrementally. Begin with narrow, high-value tasks such as vulnerability triage, phishing simulations, or log analysis. This allows teams to gain confidence in the tools, measure results, and identify weaknesses before scaling to broader assessments.

Keep Humans in the Loop

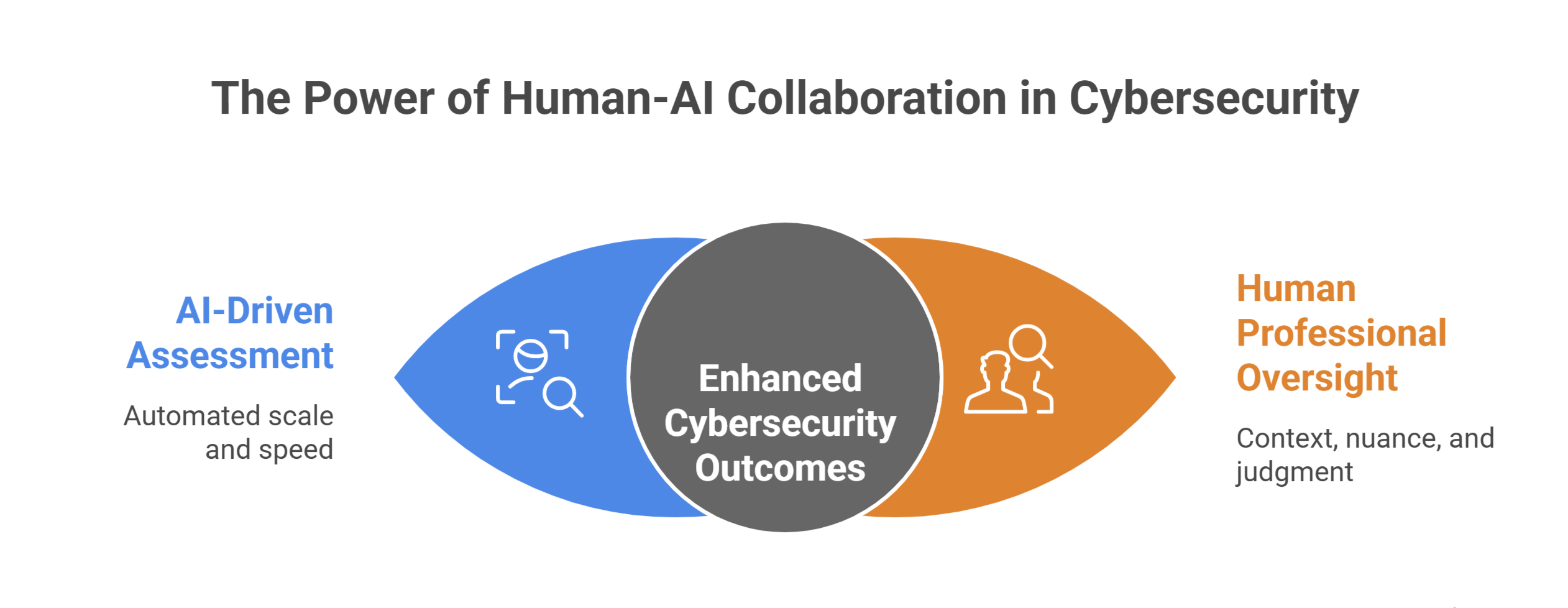

AI is powerful, but it is not infallible. Models can misclassify data, produce false positives, or even hallucinate entirely. For this reason, AI should never function as a fully autonomous assessor. Every finding must be validated by experienced professionals who can apply context, nuance, and judgment. The right model is a blend, whereby AI delivers scale and speed, while an human agent ensure accuracy, relevance, and strategic alignment.

Demand Transparency and Explainability

Avoid black-box AI models that can’t justify their decisions. Whether for technical teams or auditors, explainability is key to building trust. If an AI system flags a vulnerability as “critical,” assessors should be able to understand why it has done so.

Integrate With Existing Frameworks

AI adoption should not replace established structures like NIST CSF, MITRE ATT&CK, or ISO 27001. Instead, it should augment them. Mapping AI-driven assessments to recognized frameworks ensures consistency and credibility while making results easier to benchmark.

Establish Clear Governance

Set policies that define how and when AI is used in assessments, who validates outputs, and what data can be shared. Governance should also address security concerns, including how to protect AI systems themselves from exploitation or tampering.

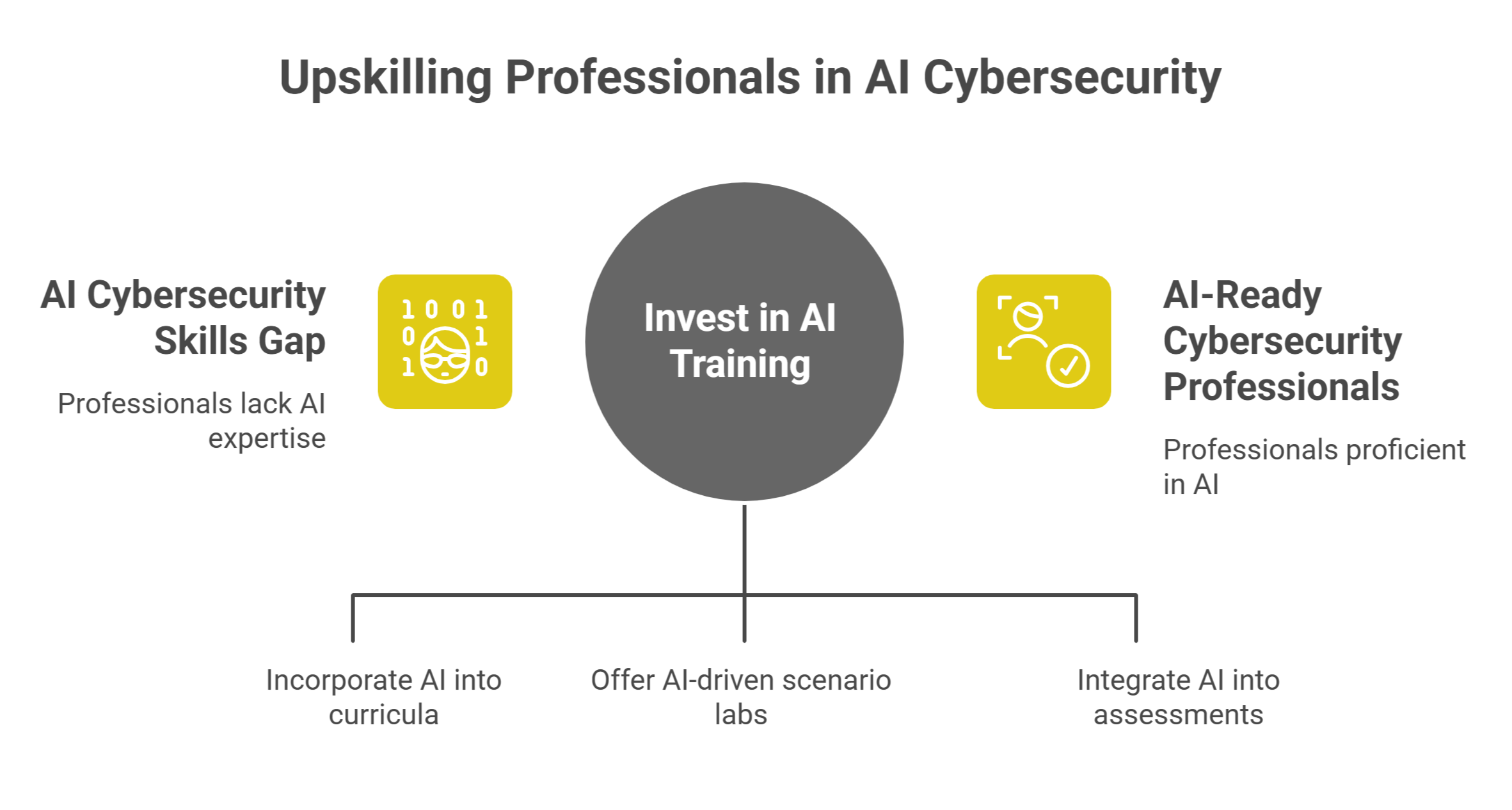

Invest in Skills and Training

Perhaps the most important step is preparing professionals to work with AI. Tools are only as effective as the people wielding them, and too many security programs still treat AI as either a gimmick or a threat. That mindset has to change. Training programs, bootcamps, and certifications should explicitly incorporate AI into their curricula. Platforms like Hack The Box have already taken the lead, offering red teaming paths and labs dedicated to AI-driven scenarios. SANS has launched entire courses on offensive AI, while universities and corporate programs are beginning to experiment with AI-integrated assessments. This is the best path forward, and industry-wide adoption should be encouraged rather than resisted.

The idea of integrating new technology into learning is not without precedent. When calculators first appeared, schools banned them, fearing they would weaken students’ ability to perform basic arithmetic. Yet over time, calculators became indispensable, freeing learners to focus on higher-order problem solving. AI in cybersecurity education is at the same crossroads. Failing to embrace AI risks creating professionals who are technically skilled but strategically unprepared for the realities of modern threats. Normalizing AI in the classroom and in certification exams just as we did with calculators, will ensure the next generation of defenders is prepared for an adversary that is already training with these tools.

Conclusion: Looking Ahead

The future of cybersecurity assessments should not be reduced to the familiar narrative of machines replacing humans in the workforce. Instead, it must be viewed through the lens of augmentation. The true purpose of embracing AI in assessments is not to automate human expertise away, but to amplify it.

The organizations that thrive in this new era will be those that recognize AI not as a novelty or optional add-on, but as an indispensable ally in the assessment process. Those who resist the future risk being left behind and those who embrace it responsibly will help define the next era of cybersecurity resilience.

References

CrowdStrike. (2024). AI-powered cyberattacks: The next frontier of threats. CrowdStrike. https://www.crowdstrike.com/en-us/cybersecurity-101/cyberattacks/ai-powered-cyberattacks/

GovInfoSecurity. (2025, August 22). Attackers use AI to build ransomware at rapid scale. Information Security Media Group. https://www.govinfosecurity.com/attackers-use-ai-to-build-ransomware-at-rapid-scale-a-29552

Hack The Box. (n.d.). Introduction to Red Teaming AI. Hack The Box Academy. https://academy.hackthebox.com/course/preview/introduction-to-red-teaming-ai

Palo Alto Networks Unit 42. (2024, September 9). Agentic AI threat analysis: Nine emerging attack scenarios. Unit42. https://unit42.paloaltonetworks.com/agentic-ai-threats/

SANS Institute. (n.d.). SEC535: Offensive AI — Attack Tools and Techniques. SANS Institute. https://www.sans.org/cyber-security-courses/offensive-ai-attack-tools-techniques

Shavit, Y., et al. (2025, April 29). Threats and opportunities of agentic AI: A survey. arXiv preprint arXiv:2504.19956. https://arxiv.org/pdf/2504.19956

Watters, A. (2015, March 12). A brief history of calculators in the classroom. Hack Education. https://hackeducation.com/2015/03/12/calculators

Zhang, Z., et al. (2025, September 26). “Your AI, My Shell”: Demystifying Prompt Injection Attacks on Agentic AI Coding Editors arXiv preprint arXiv:2509.22040. https://arxiv.org/pdf/2509.22040